The very first computers used vacuum tube technology and gave off a tremendous amount of heat.

In the 1960s, mainframe computers switched to transistor technology, and many of them had to circulate liquid inside the machine to stay cool.

The supercomputers of the 70s and 80s (Crays, for example) used complex liquid-cooled technology. However, advances in microprocessor technology have since delivered very high performance at a more reasonable heat output.

Servers today can be cooled by moving air across them and rejecting the heat through chillers and cooling towers. They do not need liquid inside the server or on the processors.

The power profile of mainstream 2U servers has stayed around 150W. This thermal design power (TDP), sometimes called thermal design point, has been in place for roughly the last 10 years.

This is because that much heat can reasonably be removed from a 2U chassis, and such servers can be cooled with a traditional data center design built around precision cooling technologies.

Rise of AI demands improved data center cooling design to match its processing power

Now we have an application that is placing massive demands on processing in data centers – artificial intelligence (AI).

AI has begun to hit its stride, springing from research labs into real business and consumer applications.

Because AI applications are so compute heavy, many IT hardware architects are using GPUs as the core processors or as supplemental processors. The heat profile of many GPU-based servers is double that of more traditional servers, with a TDP of 300W vs 150W.

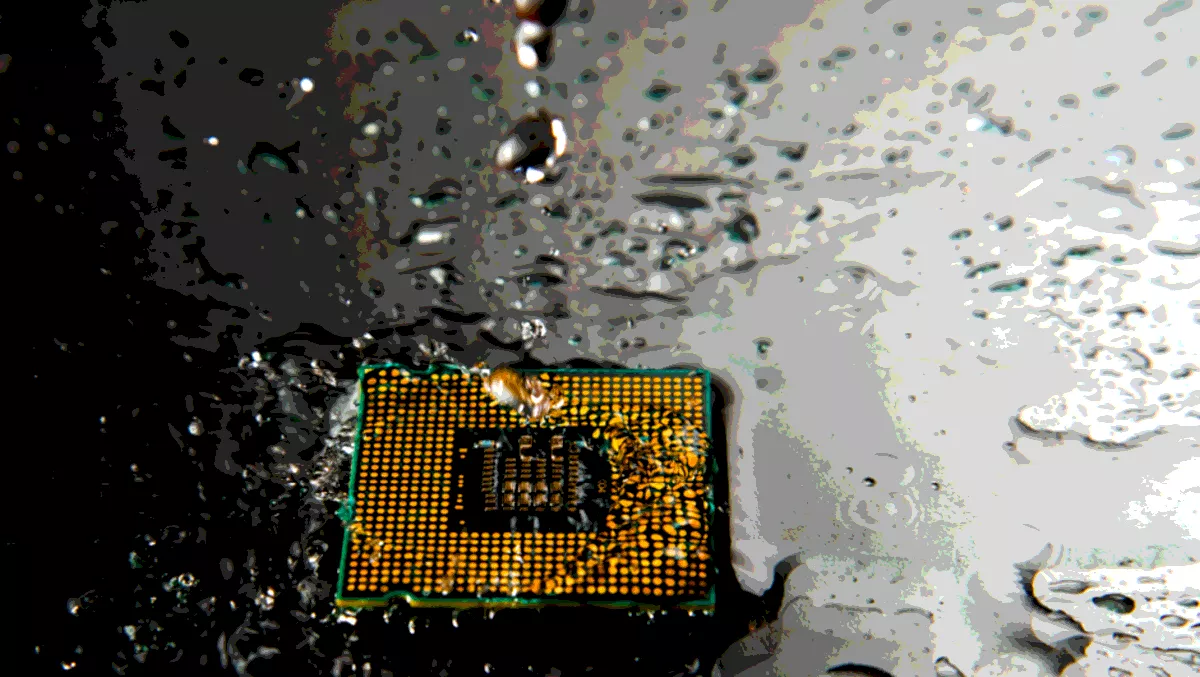
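To put the 150W vs 300W comparison into rack terms, here is a rough back-of-the-envelope sketch. It assumes a 42U rack fully populated with 2U servers (21 servers) and treats the quoted TDP as each server's entire heat output. Both are simplifying assumptions made for illustration, not figures from a real deployment, and the function and constant names are hypothetical.

```python
# Rough rack heat-load comparison.
# Assumptions (illustrative only): a 42U rack filled entirely with 2U servers,
# and each server's heat output equal to the TDP figure quoted in the text.

WATTS_TO_BTU_PER_HOUR = 3.412  # approximate conversion factor

RACK_UNITS = 42        # assumed rack height
SERVER_HEIGHT_U = 2    # 2U servers, as discussed in the text
SERVERS_PER_RACK = RACK_UNITS // SERVER_HEIGHT_U


def rack_heat_load_watts(server_watts: float, servers_per_rack: int) -> float:
    """Total heat the cooling system must remove from one rack, in watts."""
    return server_watts * servers_per_rack


for label, tdp_watts in (("traditional", 150), ("GPU-based", 300)):
    load = rack_heat_load_watts(tdp_watts, SERVERS_PER_RACK)
    btu_per_hour = load * WATTS_TO_BTU_PER_HOUR
    print(f"{label}: {load / 1000:.2f} kW per rack (~{btu_per_hour:,.0f} BTU/h)")
```

Under these assumptions, the same rack goes from roughly 3.15 kW to 6.3 kW of heat to reject. Real racks also hold switches, power distribution and storage, so actual loads will differ.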

That is why we are seeing a renaissance of sorts in liquid cooling. Liquid cooling has been kicking around in niche high performance computing (HPC) applications for a while, but the new core applications for AI are driving demand on a much bigger scale.

The benefits of liquid cooling technology can include the ability to deploy at a much higher density, a lower total cost of ownership (TCO) driven by increased efficiency, and a greatly reduced footprint.

While the benefits are impressive, the barriers are somewhat daunting.

Liquid cooling is highly complex, requiring new server chassis designs, manifolds, piping and liquid flow rate management. There is fear of leakage from these complex systems of pipes and fittings, and there is a real concern about maintaining the serviceability of servers, especially at scale.

More data center design advancements to come, just like liquid cooling

Traditionalists will argue that it may not make sense to switch to liquid cooling and that we can stretch core cooling technologies further instead.

Remember, people argued that we didn't need motorised cars and we just needed faster horses!

Or, more recently, people argued that Porsche should never water-cool the engine in a 911 and were outraged when it happened in 1998.

This outrage has since subsided and there is now talk about a hybrid electric 911. As in cars, technology in the data center continues to advance and will continue indefinitely.